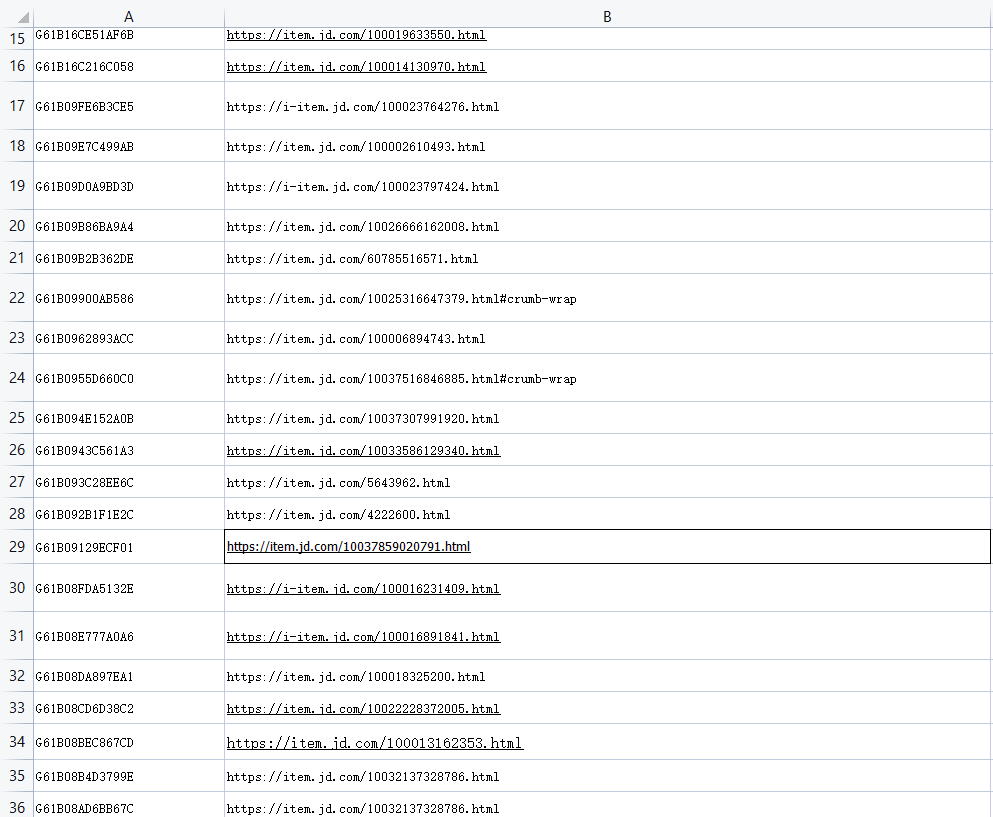

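The following Python 3 code scrapes JD.com (京东) product detail pages, downloading the gallery and detail images. It runs in batch, reading the product links from an Excel file. The Excel file is shown in the screenshot.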
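The script below keeps only rows whose link contains `item.jd.com` but not `i-item.jd.com`. That row filter can be tried on its own.

The sketch below assumes a two-column sheet: product code in column A, product link in column B, and a header in row 1. This layout is inferred from the script's "cell plus the cell to its right" logic. `supported_rows` and `is_supported_jd_link` are hypothetical names, not part of the original code.

```python
from itertools import islice
from typing import Iterable, Iterator, Sequence, Tuple


def is_supported_jd_link(link) -> bool:
    """Same rule as the script: contains item.jd.com but not i-item.jd.com."""
    text = str(link)
    return 'item.jd.com' in text and 'i-item.jd.com' not in text


def supported_rows(rows: Iterable[Sequence]) -> Iterator[Tuple[str, str]]:
    """Yield (code, link) for each supported data row.

    Assumed layout (hypothetical): column A = product code,
    column B = product link, row 1 = header.
    """
    for row in islice(rows, 1, None):  # skip the header row
        if len(row) < 2 or row[0] is None:
            continue  # empty code cell
        code, link = row[0], row[1]
        if is_supported_jd_link(link):
            yield str(code), str(link).strip()
        else:
            print(f'Unsupported format: {code}|{link}')
```

With openpyxl, `ws.iter_rows(values_only=True)` yields each row as a plain tuple, so the rows could be passed in as `supported_rows(ws.iter_rows(values_only=True))`.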

The code is as follows:

```python
import os
import time
from urllib.parse import urlparse

import openpyxl
import requests
from lxml import etree
from selenium import webdriver
from selenium.common.exceptions import WebDriverException
from selenium.webdriver.common.by import By

# User-Agent header sent with every image request
HEADERS = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64; rv:50.0) Gecko/20100101 Firefox/50.0'}
# Images are saved to ./img (created if missing)
IMG_DIR = os.path.join(os.getcwd(), 'img')


class EgongYePing:
    def __init__(self):
        # Firefox download preferences; Selenium 4 sets them on the options
        # object instead of passing a separate FirefoxProfile
        options = webdriver.FirefoxOptions()
        options.set_preference('browser.download.folderList', 2)
        options.set_preference('browser.download.manager.showWhenStarting', False)
        options.set_preference('browser.helperApps.neverAsk.saveToDisk',
                               'application/zip,application/octet-stream')
        # One browser per instance instead of a module-level global
        self.driver = webdriver.Firefox(options=options)
        os.makedirs(IMG_DIR, exist_ok=True)

    @staticmethod
    def _download(url, name):
        """Download one image and save it as img/<name>.<extension from the URL>."""
        try:
            r = requests.get(url, headers=HEADERS, timeout=10)
        except requests.RequestException:
            print('Image not accessible: ' + url)
            return
        if r.status_code != 200:
            return
        # Extension = text after the first dot of the last path segment (as in the
        # original); falls back to "jpg" when the segment has no dot
        filename = urlparse(url).path.rsplit('/', 1)[-1]
        ext = filename.split('.')[1] if '.' in filename else 'jpg'
        with open(os.path.join(IMG_DIR, name + '.' + ext), 'wb') as f:
            f.write(r.content)  # write the image bytes to disk

    def Init(self, url, code):
        url = url.strip()
        print(url)
        self.driver.get(url)
        # Browser operations are asynchronous: on a slow network the code can run
        # before the page has responded, so wait a fixed 3 seconds
        time.sleep(3)
        html = etree.HTML(self.driver.page_source)
        if not self.driver.title:
            return

        # Gallery images: data-url holds the path of the full-size image
        thumbs = html.xpath('//*[contains(@class,"spec-list")]//ul//li//img')
        for i, img in enumerate(thumbs):
            data_url = img.get('data-url')
            if not data_url:
                continue
            src = 'https://img14.360buyimg.com/n0/' + data_url
            print('Downloading gallery image: ' + src)
            # The first image is the main image, the rest are secondary images
            self._download(src, f'{code}_{"main" if i == 0 else "extra"}_{i}')

        # Detail images: try the three known page layouts in turn
        nodes = html.xpath('//*[contains(@class,"ssd-module")]')
        if not nodes:
            nodes = html.xpath('//*[contains(@id,"J-detail-content")]//div//div//p//img')
        if not nodes:
            nodes = html.xpath('//*[contains(@id,"J-detail-content")]//img')
        for i, node in enumerate(nodes):
            attrs = node.attrib
            name = f'{code}_detail_{i}'
            if 'data-id' in attrs:
                # ssd-module blocks show the image as a CSS background-image,
                # so read the computed style from the live page
                try:
                    css = self.driver.find_element(
                        By.CLASS_NAME, 'animate-' + attrs['data-id']
                    ).value_of_css_property('background-image')
                except WebDriverException:
                    print('Images in other formats are skipped')
                else:
                    src = css.replace('url(', '').replace(')', '').replace('"', '').strip()
                    print('Downloading detail image: ' + src)
                    self._download(src, name)
            if 'src' in attrs:
                src = attrs['src']
                if 'http' not in src:
                    src = 'https:' + src  # protocol-relative URL (starts with //)
                print('Downloading detail image: ' + src)
                self._download(src, name)
        print('Finished')

    def readxlsx(self, filename):
        wb = openpyxl.load_workbook(filename)
        ws = wb.worksheets[0]  # first sheet (get_sheet_names/get_sheet_by_name are deprecated)
        try:
            # Skip the header row; a non-empty cell is the product code and
            # the cell to its right is the product link
            for r in range(2, ws.max_row + 1):
                for c in range(1, ws.max_column):
                    code = ws.cell(r, c).value
                    if code is None:
                        continue
                    link = str(ws.cell(r, c + 1).value)
                    if 'item.jd.com' in link and 'i-item.jd.com' not in link:
                        print(f'Supported: {code}|{link}')
                        self.Init(link, str(code))
                    else:
                        print(f'Unsupported format: {code}|{link}')
        finally:
            self.driver.quit()  # always close the browser


if __name__ == '__main__':
    start = EgongYePing()
    start.readxlsx(r'C:\Users\newYear\Desktop\爬图.xlsx')
```
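The script handles two kinds of detail-image URL with plain string operations:

- For `background-image` values, it deletes `url(`, `)` and double quotes from the computed value.
- For protocol-relative `src` values, it prefixes `https:`.

As a hedged alternative, the sketch below does the same two jobs more robustly:

- A regular expression that also copes with single quotes, unquoted URLs, or several `url(...)` entries.
- `urllib.parse.urljoin`, which resolves `src` against the product page URL.

`css_background_urls` and `absolute_img_url` are hypothetical helper names, not part of the original code.

```python
import re
from typing import List
from urllib.parse import urljoin

# Matches url(...) with double, single or no quotes; group 2 is the URL itself
_CSS_URL = re.compile(r'url\(\s*(["\']?)(.*?)\1\s*\)')


def css_background_urls(value: str) -> List[str]:
    """Return every URL in a computed background-image value ("none" gives [])."""
    return [m.group(2) for m in _CSS_URL.finditer(value)]


def absolute_img_url(src: str, page_url: str) -> str:
    """Resolve a protocol-relative (//host/...) or relative src against the page URL."""
    return urljoin(page_url, src.strip())
```

Returning an empty list for `none` lets the caller skip blocks that have no background image. The original code instead passes the string `none` on to `requests.get`, which then fails.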

Source: https://www.cnblogs.com/Gao1234/p/15784052.html


百木园
